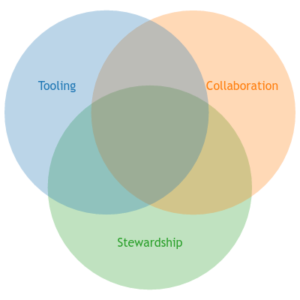

Here’s what I see as ideal “data science” leadership. This post is a nod to the classic Conway Venn Diagram, but more focused on relational skills rather than the specific individual output (much as Tunkelang suggests here).

Tooling skills

Here, it’s most helpful to be comfortable with the family of “data science” tools that is out there, and be unafraid to come up to speed quickly on whatever toolset that your particular team is using (or quickly help them find their way to better standards). This can include:

- “big data” experience with tools like Hadoop, graph dbs, and lambda architectures, including healthy skepticism about where that massively-parallel architecture is necessary

- statistics and machine learning, but (“not Fields medalists”, as Tunkelang points out) how to find existing implementations to jump from, rather than building from scratch

- willingness to climb into research tools and tinker them into the shape that you need

- willingness to do it “the dumb way first” rather than letting the perfect be the enemy of the good

Mostly-Python and Mostly-Java architectures have different strengths here, but it’s been my experience that it’s very hard to build data science analysis stacks that use both. (I prefer the Python stack; a subject for another day.)

Collaboration skills

Nearly all data scientists are working in multidisciplinary teams — or if they’re not, their “data science team” spends much of its time consulting with teams from other disciplines. Data scientists need very good collaboration skills, which include the following:

- the ability to make their specialist partners more effective (not necessarily dazzled by the bleeding edge)

- understanding (and designing, if necessary) the development and release process of useful data science tools and artifacts (see below)

- a willingness to impose code and data discipline (source control, privacy restrictions) on non-coders and other specialists who may not know about those tools

- a low ego, even within “data science specialties”, but the ability to explain those specialties to the lay collaborators

- relative ease and comfort when surrounded by (complementary) greater expertise

Many of these skills are the skills of a good program manager, and many programmers don’t quite keep up on this department. The last one is harder to find than one might expect (though much depends on the data scientist’s own enthusiasm for learning).

Data stewardship

The last part of “data science”, as I see it, is the ownership and care of the data itself. This process requires attention and understanding up and down the data stack, and it’s fundamentally important to follow the Uncle Ben Rule: with great power comes great responsibility. In the simplest form, this includes ownership of the data ingestion pipeline:

- understanding the methods of collection (and the biases that might introduce)

- eagerness and experimental interest in combining inbound information from disparate sources

- keeping provenance and access records when appropriate

- an awareness of how ETL and other pipeline processes may bias the incoming data

Stewardship extends to the input side of things in the form of respecting the data sources, especially if they are human subjects:

- collection itself must be done with clean hands; just because you can get data doesn’t mean you should

- maintaining privacy of the source subjects, through appropriate access controls, anonymization, and working relationships with IRBs and ethics panels

- ensuring that value returns to the data sources (sometimes in the form of the resulting models, sometimes in the form of attribution or credit-assignment, or others)

Finally, stewardship extends to the management of the models (data artifacts) built with the tools the data scientist provides. This has its own set of challenges:

- awareness of how each model class trades off between bias and variance

- understanding how the partners intend to use the models, and whether their intended use matches up with the biases (and variance) in the models

- understanding the potential that the artifacts have to put vulnerable people — even those who did not participate in the data collection — at risk (consider that this study was done in North Carolina).

As a further exercise, there are plenty of useful skills in the partial intersections of the Venn diagram. Skills with Git, for example, are collaboration and tooling but not really stewardship; skills with data collection tool design or archiving might be tooling and stewardship (without much collaboration); negotiating the provenance of datasets from feuding organizations is stewardship and collaboration, without much tooling.

Leave a Reply